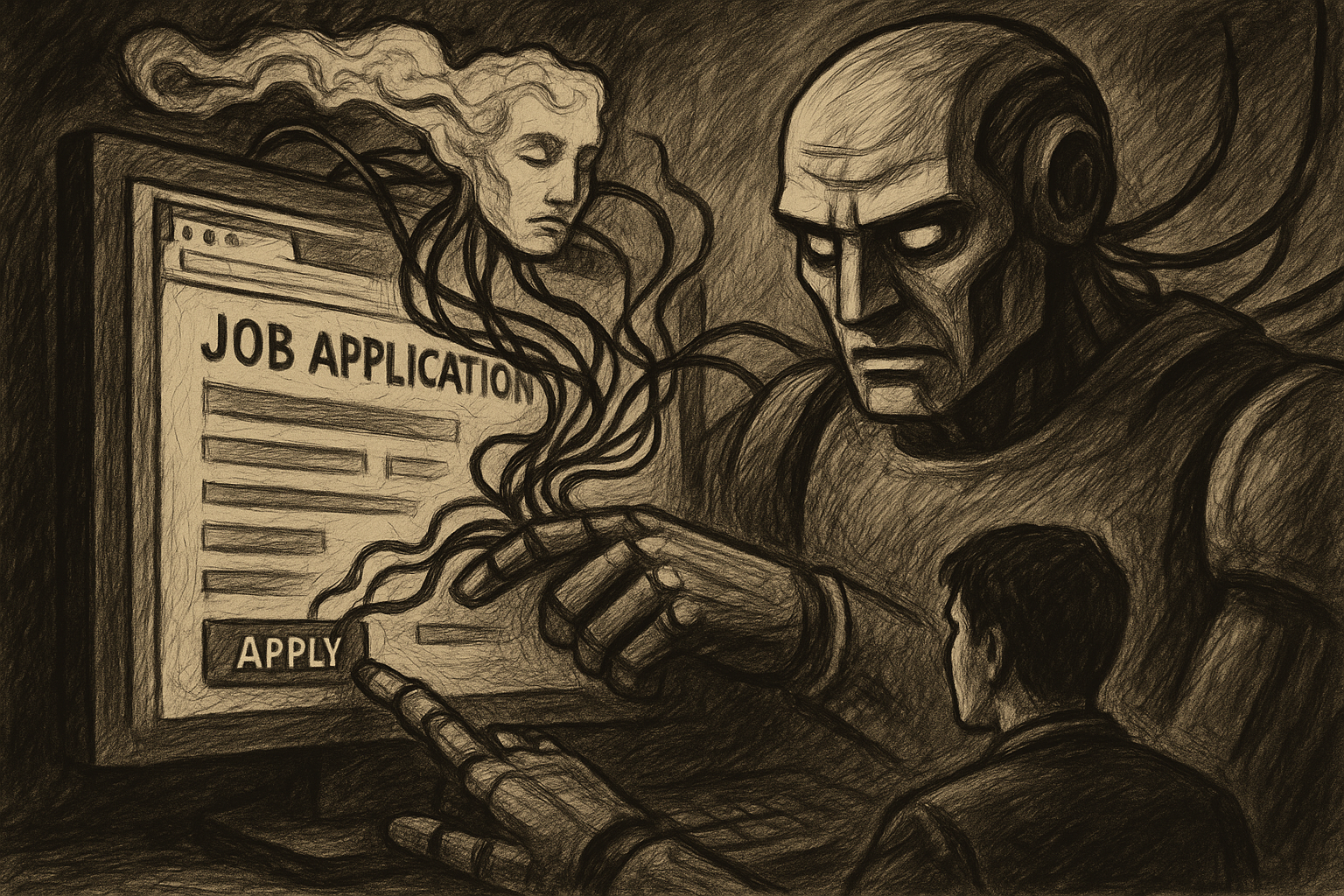

Using Browser Agents Powered by LLMs to Scrape Data from Job Application Forms


- LLM-Powered Automation: Recent advancements in browser agents are transforming data scraping from job application forms.

- Dynamic Adaptation: LLMs exhibit near-human adaptability in navigating web forms with diverse layouts.

- Data Privacy Concerns: Companies must navigate tightening regulations while leveraging scraping technology.

- Sophisticated Use Cases: Trends show increasing utilization of scraped job data for labor market analytics and competitive intelligence.

- Compliance Strategies: Organizations are urged to adopt privacy-aware scraping protocols to mitigate legal risks.

Table of Contents

- Industry Trends & Background

- Insights from Recent Research

- Addressing the Challenges: Technical, Legal, & Ethical

- Real-World Best Practices

- Practical Takeaways

- Final Thoughts & Take Your Next Step

- FAQ

Industry Trends & Background

How Browser Agent LLMs are Redefining Automation

The process of scraping data from job application forms has leapt forward with the advent of browser agents powered by large language models (LLMs). If you’re tracking labor market shifts, building intelligent recruitment pipelines, or seeking to streamline your business’s talent acquisition workflow, the ability to extract structured information from complex online forms at scale is now more accessible—and more nuanced—than ever before.

In 2025, AI agents aren’t just answering questions; they’re autonomously completing entire workflows. LLMs such as Llama 3, GPT-4, and their successors can now interpret, manipulate, and extract information from HTML structures, JavaScript-rendered content, and highly variable web forms with near-human adaptability.

This wave of intelligent browser automation is powered by major advancements in technologies including:

- LLM-Driven Automation: LLMs parse content, understand context, and control browser navigation, clicking, and data entry.

- Flexible Browser Agents: Open-source frameworks such as PulsarRPA and VimGPT, and commercial tools such as Firecrawl and Bright Data Scraping Browser, enable interaction with JavaScript-heavy forms.

- Human-Like Behavior: To evade anti-bot detection, agents randomize mouse movements, introduce scrolling delays, and rotate proxies, mimicking genuine users.

The result? LLM-powered agents extract critical data from forms previously inaccessible to rule-based scrapers. They’re powering job aggregators, automating applicant tracking for both recruiters and job seekers, and driving sophisticated labor market analytics.
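As a rough illustration of the observe, decide, act loop that such agents run, the sketch below uses a toy in-memory page and a stub in place of the model. `FakePage`, `stub_policy`, and the action dictionary format are all hypothetical names chosen for this example; a real agent would swap in a browser driver and an LLM call.

```python
from dataclasses import dataclass, field

PREFIX = "empty fields: "


@dataclass
class FakePage:
    """Toy stand-in for a browser page: field names mapped to values."""
    fields: dict = field(default_factory=lambda: {"job_title": "", "location": ""})
    submitted: bool = False

    def observe(self) -> str:
        # A real agent would serialize the DOM or an accessibility tree here.
        empty = [name for name, value in self.fields.items() if not value]
        return PREFIX + ", ".join(empty) if empty else "all fields filled"


def stub_policy(observation: str) -> dict:
    """Hypothetical replacement for the LLM: maps an observation to one action."""
    if observation.startswith(PREFIX):
        target = observation[len(PREFIX):].split(", ")[0]
        return {"action": "fill", "field": target, "value": f"demo-{target}"}
    return {"action": "submit"}


def run_agent(page: FakePage, policy, max_steps: int = 10) -> list:
    """Observe, ask the policy for an action, apply it; stop on submit or step cap."""
    trace = []
    for _ in range(max_steps):
        action = policy(page.observe())
        trace.append(action["action"])
        if action["action"] == "fill":
            page.fields[action["field"]] = action["value"]
        elif action["action"] == "submit":
            page.submitted = True
            break
    return trace
```

The step cap matters in practice: a model that keeps proposing the same failing action would otherwise loop indefinitely.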

Why the Surge in 2025?

The sheer complexity and dynamism of job application forms—often guarded by CAPTCHAs, behavioral checks, and ever-changing layouts—demand a new kind of automation. Platforms like LangChain, LangGraph, and LlamaIndex have become the standard for building sophisticated LLM agents.

Nearly 40% of organizations are planning deeper LLM integration in their operations, automating everything from customer interactions to recruitment processes. Legacy scraping is giving way to intelligent workflows, while job application data—an unparalleled resource for labor analytics and competitive intelligence—is now within practical reach.

The Key Players and Technology Stack

Backed by new industry entrants and veterans alike, the technology stack for LLM-powered scraping includes:

- Development Frameworks: LangChain, LangGraph (for agent orchestration and chaining with browser tools)

- All-in-One Tools: Firecrawl, Bright Data Scraping Browser (ready-made, dynamic, LLM-powered scraping with anti-detection features)

- Agentic Frameworks: PulsarRPA, VimGPT (community-driven, supporting both text and vision-based tasks)

- Integration Libraries: Beautiful Soup and Scrapy (Python) and Selenium (bindings for several languages), which can be chained with LLMs

- No-Code Platforms: User-facing tools that turn natural language requests directly into scraping workflows.

Combined, these innovations mean that not only developers, but also non-technical professionals, can automate extraction from dynamic job portals at scale.

Insights from Recent Research

Practical Applications Driving Value

The use cases for LLM-powered job form scraping are broad and impactful:

- Labor Market Analytics: Aggregating job posting data fuels salary benchmarks, trend forecasting, and skills gap analysis for HR consultancies and governments.

- Competitive Intelligence: Real-time monitoring of rivals’ hiring campaigns allows insights into expansion plans and evolving recruiting strategies.

- Job Aggregators: Search engines and job platforms index disparate listings to create comprehensive, searchable experiences.

- Applicant Tracking Automation: Job seekers use agents to monitor application status; recruiters track feedback and process candidates in bulk.
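To make the labor market analytics case concrete, here is a minimal sketch of a salary benchmark computed from already-scraped postings. The record shape (`title`, `salary`) and the function name `salary_benchmarks` are assumptions made for this example, and the figures in any real run would depend entirely on the data collected.

```python
from collections import defaultdict
from statistics import median


def salary_benchmarks(postings: list) -> dict:
    """Group postings by normalized title and report count and median salary."""
    by_title = defaultdict(list)
    for posting in postings:
        salary = posting.get("salary")
        if salary is None:
            continue  # many postings omit salary; skip rather than guess
        by_title[posting["title"].strip().lower()].append(salary)
    return {
        title: {"count": len(values), "median": median(values)}
        for title, values in by_title.items()
    }
```

The median is used instead of the mean because a handful of outlier postings can otherwise skew a benchmark.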

Beyond data extraction, some AI agents are now being trained to summarize and even interpret extracted data, transforming raw information into actionable business intelligence.

Addressing the Challenges: Technical, Legal, & Ethical

For every breakthrough, new challenges have surfaced:

1. Sophisticated Anti-Bot Defenses

Modern job forms deploy CAPTCHAs, device fingerprinting, and real-time behavioral analysis. Browser agents must continually “level up”—using headless browsers, dynamic mouse patterns, and smart proxy rotation. Still, no solution is perfect; anti-bot arms races have become a fixture in the industry.

2. Dynamic, Changing Layouts

Job application layouts often change, sometimes automatically adjusting according to user profile or location. LLMs mitigate this by understanding intent from labels and semantic structure, not just static HTML.
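One way to picture "understanding intent from labels" is to reduce a form to label and field pairs before handing it to a model, so the description stays the same when the surrounding markup moves. The sketch below does this with Python's standard `html.parser`; the class name `FormFieldParser`, the function `describe_form`, and the output record shape are assumptions for illustration, and it only handles labels linked through a `for` attribute.

```python
from html.parser import HTMLParser


class FormFieldParser(HTMLParser):
    """Collects <label for=...> text and the fields those labels point to."""

    def __init__(self):
        super().__init__()
        self.labels = {}   # element id -> label text
        self.inputs = {}   # element id -> {"name": ..., "type": ...}
        self._current_for = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "label" and "for" in attrs:
            self._current_for = attrs["for"]
            self.labels[self._current_for] = ""
        elif tag in ("input", "select", "textarea") and "id" in attrs:
            self.inputs[attrs["id"]] = {
                "name": attrs.get("name", attrs["id"]),
                "type": attrs.get("type", tag),
            }

    def handle_data(self, data):
        # Accumulate text only while inside a <label for=...> element.
        if self._current_for is not None:
            self.labels[self._current_for] += data

    def handle_endtag(self, tag):
        if tag == "label":
            self._current_for = None


def describe_form(html: str) -> list:
    """Return one {"label", "name", "type"} record per labelled field."""
    parser = FormFieldParser()
    parser.feed(html)
    return [
        {"label": " ".join(parser.labels[i].split()), **parser.inputs[i]}
        for i in parser.inputs
        if i in parser.labels
    ]
```

Two layouts that wrap the same field in different containers, or place the label after the input, produce the same record, which is the property a layout-tolerant agent relies on.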

3. Data Quality and Hallucinations

While LLM agents excel at context interpretation, they can hallucinate, fabricating information or misreading ambiguous form fields. Techniques such as Retrieval-Augmented Generation (RAG) reduce this risk by grounding agent responses in external data sources.
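A simpler safeguard than RAG, and one that complements it, is to check every extracted value against the page text it supposedly came from. The sketch below is a plain substring check, not RAG; `split_grounded` and the sample field names are hypothetical, and exact matching will flag legitimately reformatted values (for example a normalized date) for review.

```python
import re


def _normalize(text: str) -> str:
    """Lowercase and collapse whitespace so formatting differences do not matter."""
    return re.sub(r"\s+", " ", text).strip().lower()


def split_grounded(extracted: dict, source_text: str) -> tuple:
    """Split extracted fields into those found verbatim in the source and those not."""
    haystack = _normalize(source_text)
    grounded, suspect = {}, {}
    for key, value in extracted.items():
        needle = _normalize(str(value))
        if needle and needle in haystack:
            grounded[key] = value
        else:
            suspect[key] = value  # candidate hallucination; route to human review
    return grounded, suspect
```

Fields that land in the suspect set are not necessarily wrong, but they are the ones worth sending to a reviewer before they reach a dataset.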

4. Compliance and Privacy Risks

- Terms of Service (ToS) Violations: Most job boards expressly forbid automated scraping. Non-compliance risks IP bans, legal threats, or blocked accounts.

- Data Privacy Laws: Regulations such as GDPR and CCPA impose strict requirements on storing and processing personal data. Any scraping that may capture names, emails, or resumes must implement robust consent and security measures.

- Sensitive Data Exposure: Unauthorized or careless extraction and storage of identifiable applicant data risks major privacy breaches.

Best practices now include hosting agents locally (to avoid cloud data leaks), integrating privacy-by-design features (automated redaction, consent management), and maintaining detailed audit logs.
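The redaction and audit-log ideas can be sketched in a few lines. The regular expressions below are deliberately simple assumptions that will miss some formats and over-match others, and `redact` and `audit_entry` are hypothetical helper names; the point is the shape of the step, not production-grade PII detection.

```python
import hashlib
import json
import re
from datetime import datetime, timezone

# Simplified patterns, assumed for illustration only.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> tuple:
    """Replace emails and phone-like numbers with placeholders; return text and counts."""
    text, n_email = EMAIL_RE.subn("[EMAIL]", text)
    text, n_phone = PHONE_RE.subn("[PHONE]", text)
    return text, {"email": n_email, "phone": n_phone}


def audit_entry(source_url: str, raw_text: str, counts: dict) -> str:
    """Build a JSON audit line that records what was redacted without storing the raw text."""
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "source": source_url,
        "raw_sha256": hashlib.sha256(raw_text.encode("utf-8")).hexdigest(),
        "redactions": counts,
    })
```

Logging a hash of the raw text instead of the text itself lets an auditor confirm which input was processed without the log becoming a second copy of the personal data.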

5. Scalability and Maintenance

As the saying goes, “scraping is easy, scaling isn’t.” Distributing scraping jobs across rotating proxies, handling rate limits, and keeping up with the anti-bot race are ongoing operational burdens. New enterprise players offer low-code and LLM-powered tooling to simplify scaling, but technical debt can mount quickly.
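Handling rate limits usually comes down to retrying with increasing, randomized waits. The following is a minimal sketch of exponential backoff with full jitter; `RateLimited` and the `fetch` callable are hypothetical placeholders for whatever HTTP client and throttling signal (such as an HTTP 429 response) a real pipeline uses.

```python
import random
import time


class RateLimited(Exception):
    """Raised by the hypothetical fetch callable when the server signals throttling."""


def backoff_delays(retries: int, base: float = 1.0, cap: float = 30.0, rng=random.random):
    """Yield jittered delays: rng() scaled by min(cap, base * 2**attempt)."""
    for attempt in range(retries):
        yield rng() * min(cap, base * 2 ** attempt)


def fetch_with_backoff(fetch, url: str, retries: int = 5, sleep=time.sleep, **kwargs):
    """Call fetch(url); on RateLimited, wait a jittered delay and try again."""
    for delay in backoff_delays(retries, **kwargs):
        try:
            return fetch(url)
        except RateLimited:
            sleep(delay)
    return fetch(url)  # final attempt; let any error propagate
```

The jitter spreads retries from many workers over time, so they do not all hit the server again at the same instant. Passing `sleep` and `rng` as parameters keeps the function easy to test without real waiting.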

Real-World Best Practices

What lessons have AI and automation leaders learned in the field?

- Use Expert Oversight: Combine LLM agents with expert human feedback, particularly when extracting complex or regulated data sets.

- Limit Personal Data: Scrape only what is essential for your legitimate business purpose, minimizing risk and exposure.

- Adopt Compliance Tools: Choose platforms that integrate privacy and compliance features out-of-the-box.

- Ground with RAG: Use Retrieval-Augmented Generation to ground your LLM’s output in source data and improve accuracy. RAG supplies context at query time; it does not fine-tune the model.

- Enable Flexible Workflows: Develop n8n-style automations that chain scraping, summarization, and data delivery, increasing end-to-end value and efficiency.
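Two of the practices above, limiting personal data and chaining steps into a workflow, fit naturally together. The sketch below composes a data-minimization step with a stand-in summarization step; `ALLOWED_FIELDS`, `minimize`, `summarize`, and `run_pipeline` are hypothetical names, and the summary here is a simple template where a real workflow might call an LLM.

```python
from functools import reduce

ALLOWED_FIELDS = {"title", "company", "location", "posted"}  # hypothetical allowlist


def minimize(record: dict) -> dict:
    """Drop every field that is not on the allowlist."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}


def summarize(record: dict) -> dict:
    """Stand-in for an LLM summary step: build a one-line description."""
    summary = (
        f"{record.get('title', '?')} at {record.get('company', '?')} "
        f"({record.get('location', '?')})"
    )
    return {**record, "summary": summary}


def run_pipeline(record: dict, steps) -> dict:
    """Apply each step to the output of the previous one."""
    return reduce(lambda acc, step: step(acc), steps, record)
```

Placing `minimize` first means later steps, including any external model call, never see fields that were not needed in the first place.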

Practical Takeaways

- Integration, Not Isolation: The most valuable scraping solutions now blend LLMs, browser agents, and classic tools (Selenium, Scrapy) via frameworks like LangChain.

- Privacy Is Non-Negotiable: GDPR and global standards make privacy a strategic, not just technical, concern.

- Adapt to Anti-Bot Evolution: Automated mouse movement, dynamic page rendering, and proxy rotation are table stakes.

- Scale Responsibly: Large-scale scraping requires distributed architecture, regular maintenance, and constant testing.

- Stay Informed: The landscape is dynamic: make continual investments in both technical capability and compliance knowledge.

Final Thoughts & Take Your Next Step

As LLM-powered browser agents reshape how businesses engage with online job application data, the opportunities for labor market insight, workflow automation, and competitive advantage are immense.

Smart organizations aren’t just adopting new scraping technology—they’re building intelligent, privacy-aware automation pipelines that create real business value while honoring legal boundaries and user trust.

Ready to harness the next evolution in AI automation? Explore how our AI consulting and workflow design services can help you implement best-in-class, responsible browser agent solutions for your business goals. Reach out to discuss your use case or learn more about building privacy-aware, high-impact automations tailored to the future of work.

FAQ

Q1: What are the primary advantages of using LLM-powered browser agents for scraping?

A1: They offer enhanced adaptability to complex web forms, improved data extraction capabilities, and the ability to navigate sophisticated anti-bot systems.

Q2: Are there legal risks associated with scraping job application forms?

A2: Yes, organizations must comply with terms of service and data privacy laws to avoid legal repercussions.

Q3: How can companies ensure compliance when using scraping technology?

A3: Implement robust consent mechanisms, data protection measures, and regularly review compliance with legal requirements.
